Fireflies (Concentration Exercise)

NYU ITP

I worked in a group with Sam Krystal and Tianxu Zhou to create a concentration game where users open a jar of “fireflies” onto a digital screen and control the movement of the fireflies onscreen through their own transmitted brainwave data. We used a Muse EEG, a p5.js animation shown through a projector, and a Bluetooth-enabled jar trigger.

Role

Project Manager, Developer, Designer

Materials

Arduino, p5.js, Kinect, Bluetooth, OSC, Muse EEG, node.js

Timeline

6 Weeks

THE BACKGROUND

CONCENTRATION GAME

As humans, many of us have anxiety, and we thought our project could help people with anxieties feel in control. Originally, our piece reflected this change of state from anxious to calm based on the user’s feeling of control. As we progressed, we refined our project based on story and the usage of technologies.

This eventually led us to develop a game on concentration, an easier physiological state to monitor. We see this game as raising awareness of concentration and an interesting jumping-off point for neuroscience-related conversations.

THE PROCESS

PROJECT PLANNING

To help plan and communicate about our project over the 6 weeks, I created a Trello project management board, mapping out tasks week by week.

THE IDEATION

ROUND 1

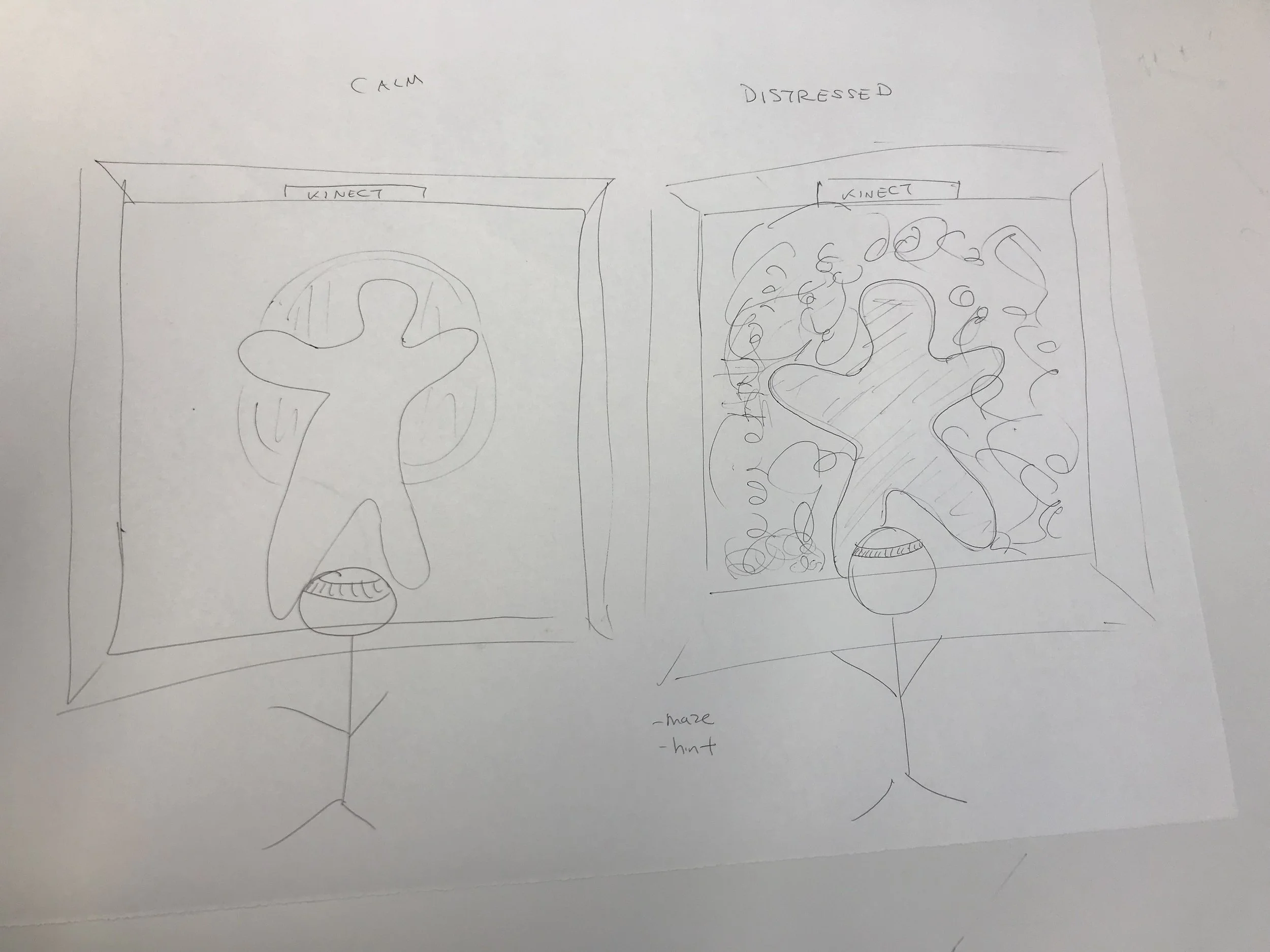

We wanted a user to transition between two states, calmness and anxiety, by meditating.

Using a Kinect, a user would see her silhouette onscreen. When she is anxious, the silhouette is a blob amid a chaotic background; when she is calm, it becomes a human silhouette against a less chaotic background.

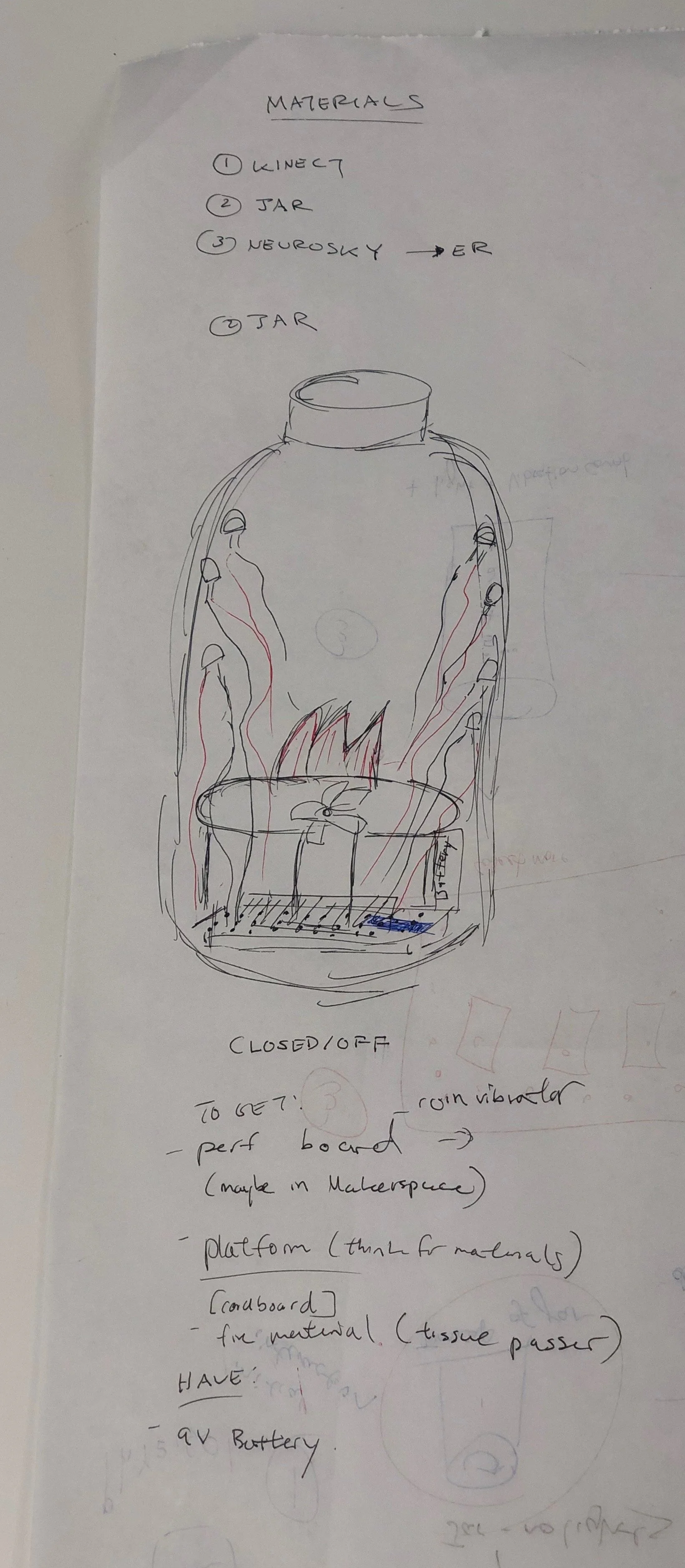

For the user to manipulate the background, we would use the Neurosky (a device that measures EEG brainwave activity). By hacking the Neurosky, we would translate the data received from it to the animation, thereby influencing its movement.

ROUND 2

We received feedback to incorporate a talisman for a physical interaction. In our original idea, we planned to use smoke as the silhouette, which inspired us to think of nature.

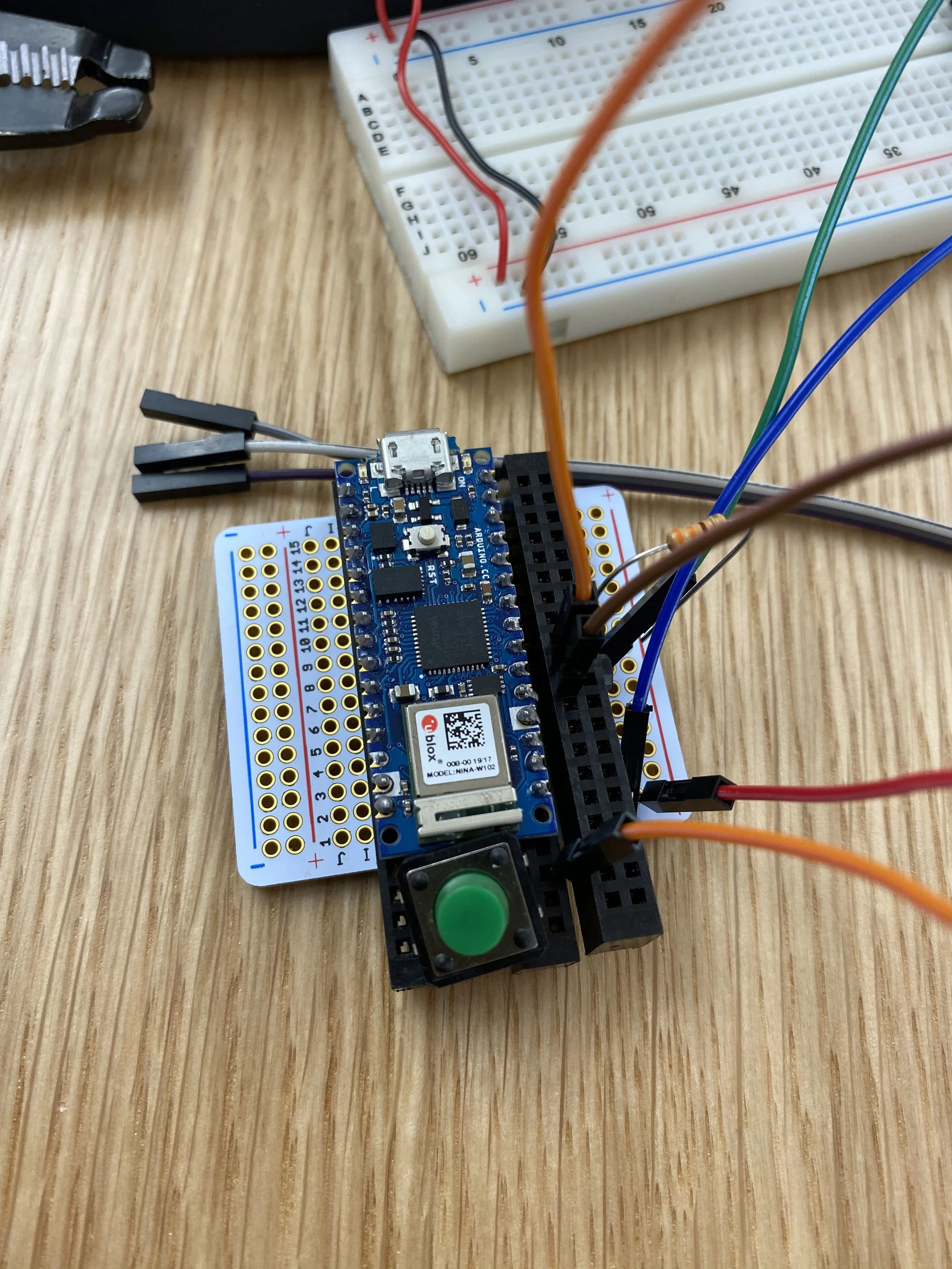

Growing up, some team members caught fireflies in our backyards. In our project, users would hold a mason jar and “catch” fireflies on a projected screen, utilizing interactions in the jar when caught. The opening of the lid would start the animation. To connect the jar to our animation we used an Arduino Nano, which has a built-in Bluetooth transmitter.

USER TESTING

INSIGHTS : ROUND 1

For user testing, we asked users to imagine their silhouette projected onto a p5.js firefly sketch while they wore a prototyped headband representing a Neurosky.

STORY CONFUSION

Users said that seeing their smoke silhouette along with the fireflies left them confused about what to do with the interaction.

From testing, we determined we had two different projects: silhouette and fireflies. We simplified our story to create our minimum viable product: obtaining brainwave data and translating it visually. We kept the idea of transitioning between two states, and adjusted to a more measurable state: concentration. Whether or not a user is concentrating determines which of two visual states appears on the screen. Hacking the Neurosky would have taken up too much of our bandwidth, so we used a Muse, a wearable brain-sensing headband, instead.

CATCHING FIREFLIES NOT A UNIVERSAL ACTIVITY

When given the mason jar, some users stood in front of the screen not knowing they were supposed to capture the flies.

Final user interaction flow

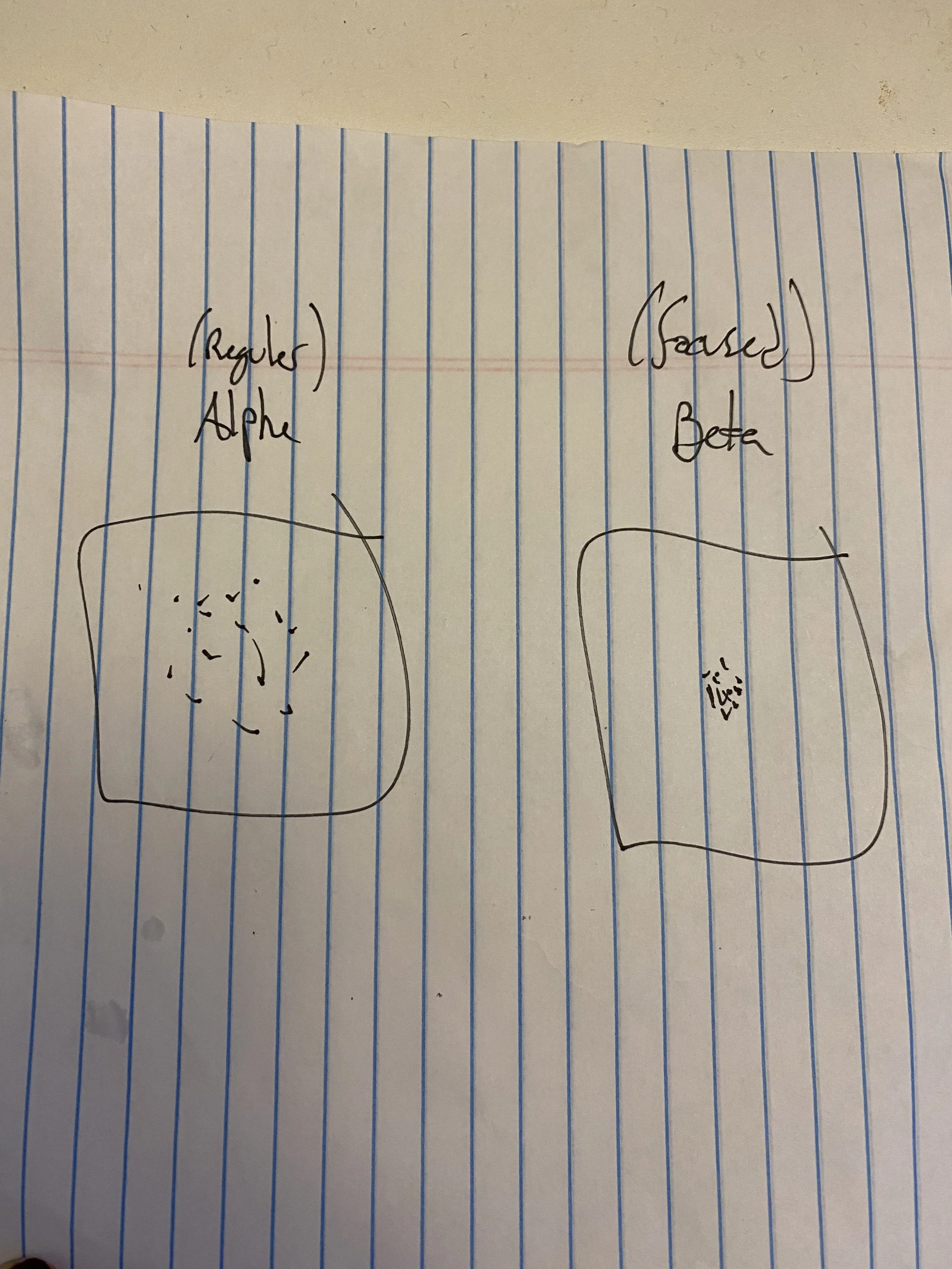

We discussed how the focused and unfocused states should look. Sam has a neuroscience background and gave Tianxu and me a brief overview of the different EEG states. We sketched out two states: alpha and beta, or “unfocused” and “focused”.

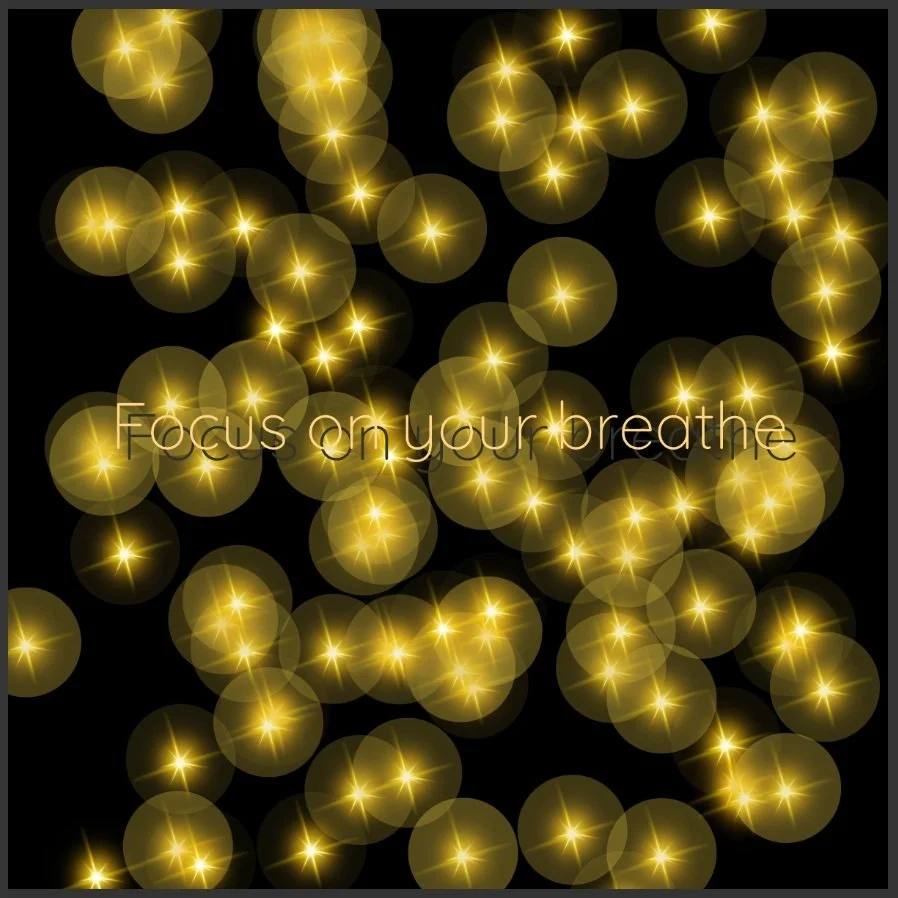

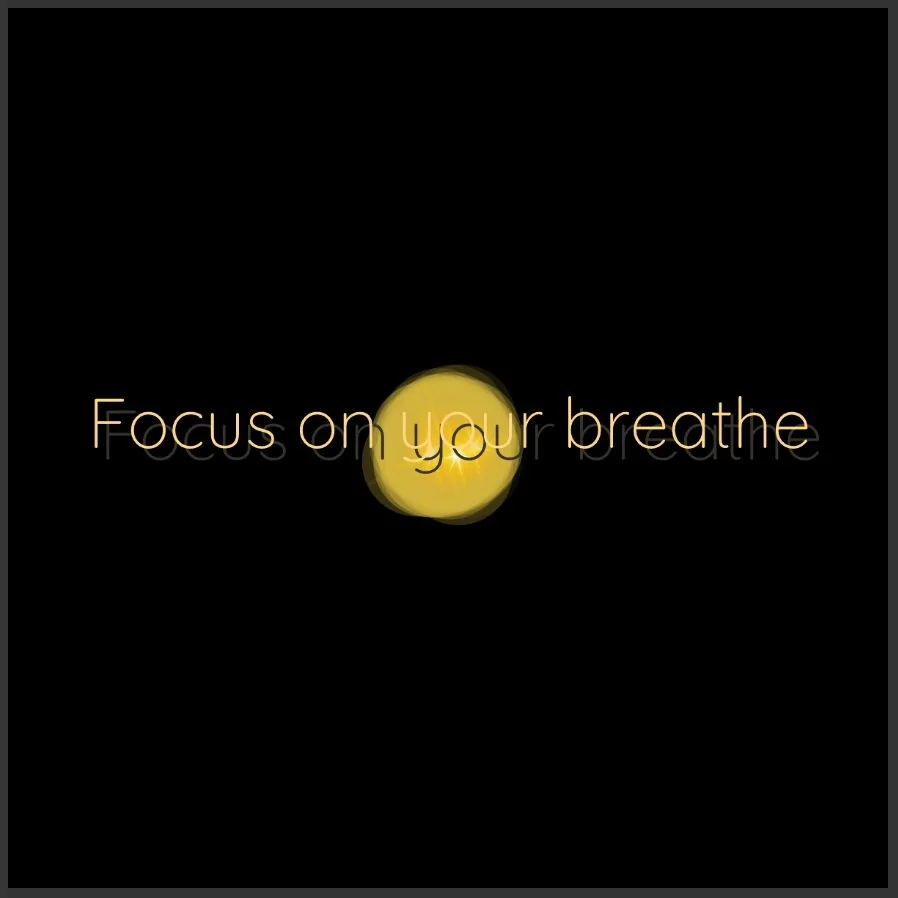

In the unfocused state, fireflies move randomly across the screen. In the focused state, the fireflies condense in the middle.

THE PROTOTYPE

WHAT DOES “CONCENTRATION” LOOK LIKE

PROTOTYPE EVOLUTION

From the sketch, I worked in p5.js to make the visualization come to life onscreen.

PROTOTYPE 1

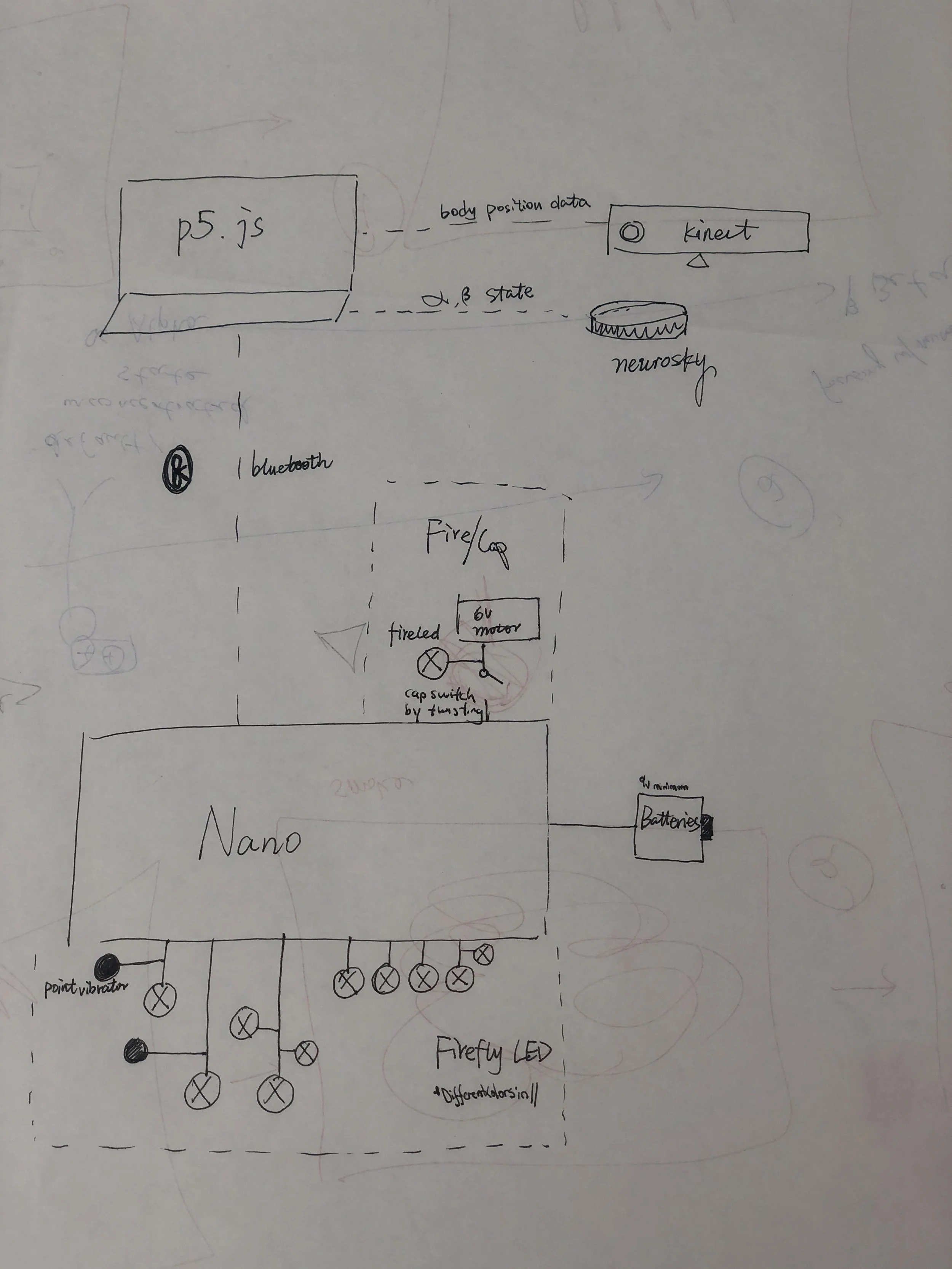

To obtain Muse EEG data for our animation, we needed a connection between the two. The mobile application “Muse Monitor” transmits live Muse data over the Open Sound Control (OSC) protocol to a Processing sketch. We then fed the OSC data from Processing into a local p5.js animation.

Trello project planning

Jar Enclosure and Structure

REFINING THE VISUALIZATION

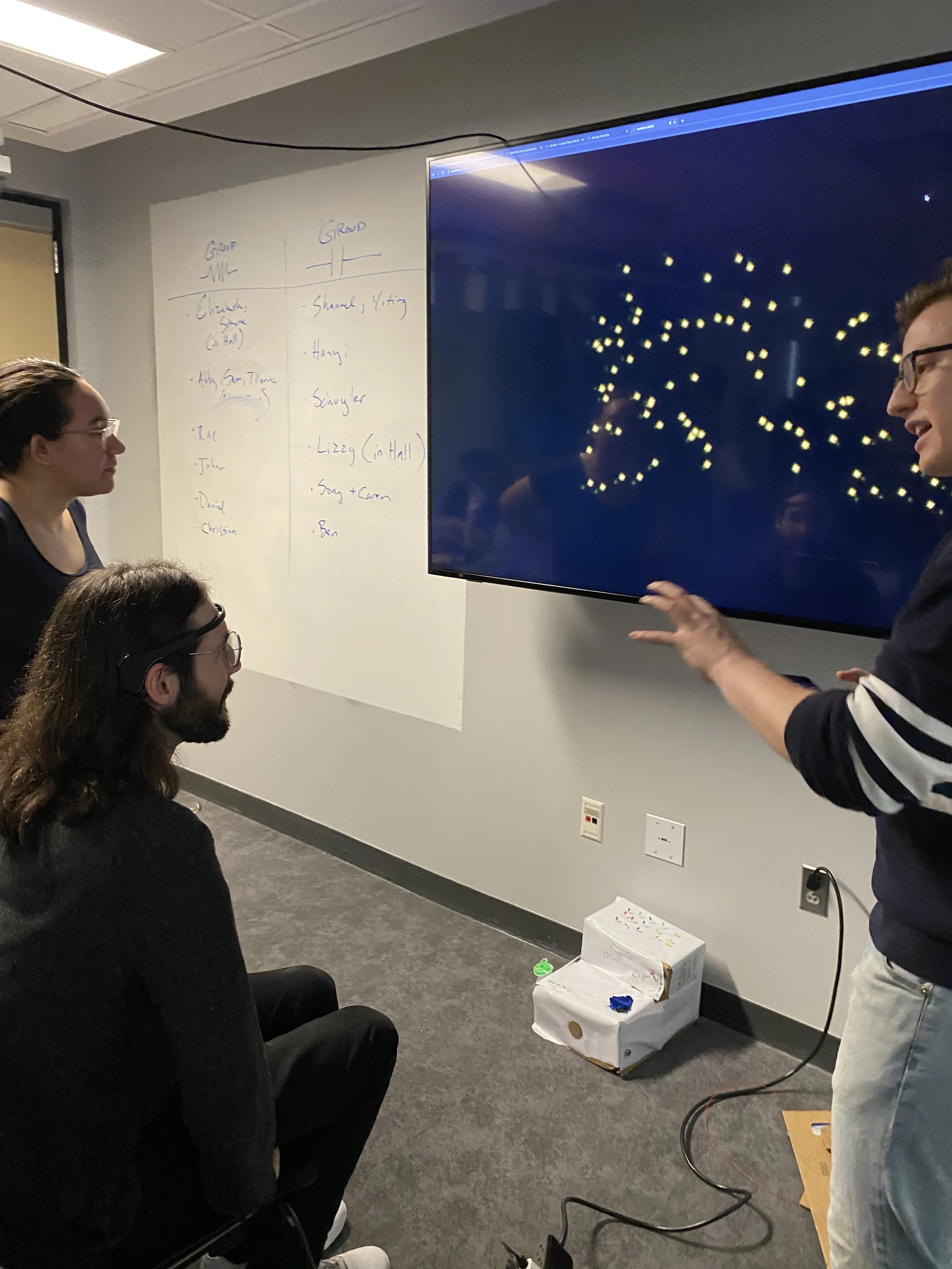

During user testing, I surveyed classmates to find out what they were thinking about when focusing. Some answers were looking at a specific point onscreen and looking at the time. So, I added randomized prompts to the screen to guide user interaction. To make the fireflies more realistic, I added flickering transparent orbs. To make the state change more noticeable, we decided to add sound.
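One way a flickering transparent orb could be driven is by varying each firefly's alpha (opacity) over time. The sketch below is only an illustration, not the project's code: the helper name `flickerAlpha`, the sine-wave approach, and all default values are assumptions.

```javascript
// Hypothetical helper (not from the project): returns an alpha value that
// pulses smoothly between minAlpha and maxAlpha as the frame count advances.
// `phase` offsets each firefly so they do not all flicker in unison.
function flickerAlpha(frame, phase, minAlpha = 40, maxAlpha = 200, speed = 0.1) {
  // Math.sin returns -1..1; shift and scale it to 0..1.
  const wave = (Math.sin(frame * speed + phase) + 1) / 2;
  return minAlpha + wave * (maxAlpha - minAlpha);
}

// Two fireflies with different phases have different opacity on the same frame.
console.log(flickerAlpha(0, 0), flickerAlpha(0, Math.PI / 2));
```

In a p5.js sketch, the returned value could be passed as the fourth argument to `fill()` before drawing each orb, with `frameCount` supplying the frame number.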

Original project sketch

Sketch of what the prototype should be : focused and unfocused states

PROTOTYPE 3

USER TESTING

INSIGHTS : ROUND 2

Playtesting 3

USER PROMPT

Instead of a step-by-step explanation, an onscreen prompt would be helpful.

Playtesting 1

PUTTING IT ALL TOGETHER

DEVICE COMMUNICATION

PROTOTYPE 2

SHOW DAY

We presented our piece during the ITP Winter Show 2019. It was successful, as people were able to understand the interaction and also ask us deeper questions about concentration and the validity of using the Muse as a tool for concentration. Here are some interactions from that day.

Playtesting 2

We programmed the Arduino Nano with a Bluetooth library, capable of interfacing with our p5 animation. Our Nano is attached to a button, triggered by the removal of the jar’s lid. When the lid of our jar is removed, the p5 animation plays and when the lid is put on, the animation stops.

Muse monitor interface

Arduino nano and push button

We had physical “fireflies” in the jar, which were Neopixel lights. When the jar is closed, the Neopixels are on; when the lid is off, the Neopixels turn off and the animation begins.

We discovered that delays created by Neopixel code can interfere with Bluetooth signal transmission. We also broke some Arduino Nanos while determining an optimal power source for our jar.

Jar switch and neopixels

Communication devices schematic

FINAL USER FLOW

Design

Code

For prototyping, I used the mouseX position to transition between unfocused and focused. Eventually, we swapped out mouseX for Muse data, coding a threshold on the brainwave data to trigger the state change. Initially, the fireflies transitioned abruptly between the states. To solve this, I used lerp() for a smoother transition.
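A minimal sketch of the threshold-plus-lerp idea follows. It is written as plain JavaScript so it runs outside p5.js; the `lerp` function here uses the same formula as p5's `lerp(start, stop, amt)`. The threshold value, the easing amount, and the names `FOCUS_THRESHOLD`, `isFocused`, and `easeToward` are assumptions for illustration, not values from the project.

```javascript
// Same formula as p5.js lerp(start, stop, amt), defined so this runs standalone.
function lerp(start, stop, amt) {
  return start + (stop - start) * amt;
}

// Hypothetical cutoff on a normalized 0..1 focus signal (assumed value).
const FOCUS_THRESHOLD = 0.6;

// Decide the state from one reading: mouseX / width while prototyping,
// a value derived from Muse data later.
function isFocused(signal) {
  return signal >= FOCUS_THRESHOLD;
}

// Each frame, move a coordinate a small fraction of the remaining distance
// toward its target instead of jumping straight to it.
function easeToward(current, target, easing = 0.05) {
  return lerp(current, target, easing);
}

// Example: a firefly at x = 0 easing toward the screen center at x = 400.
let x = 0;
for (let frame = 0; frame < 60; frame++) {
  x = easeToward(x, 400);
}
console.log(x.toFixed(1)); // approaches 400 gradually rather than snapping to it
```

Because each step covers a fixed fraction of what remains, the motion starts fast and slows as the firefly nears its target, which is what removes the abrupt jump.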

Once the fireflies were in the center, I had a hard time reversing their migration pattern. At the center, each firefly’s this.x and this.y coordinates had been updated, so the fireflies started moving from the center. I created a home state to mark the unfocused position the fireflies should return to.
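The home-state idea can be sketched as a small class that stores each firefly's starting position separately from its current position. This is an assumed structure, not the project's code: the field names `homeX` and `homeY` and the `update` signature are hypothetical, and the random wandering of the unfocused state is left out to keep the example short.

```javascript
// Same formula as p5.js lerp(start, stop, amt), defined so this runs standalone.
function lerp(start, stop, amt) {
  return start + (stop - start) * amt;
}

class Firefly {
  constructor(homeX, homeY) {
    // Home position never changes, so there is always somewhere to return to.
    this.homeX = homeX;
    this.homeY = homeY;
    // Current position, updated every frame.
    this.x = homeX;
    this.y = homeY;
  }

  // Ease toward the center when focused, back toward home when unfocused.
  update(focused, centerX, centerY, easing = 0.05) {
    const targetX = focused ? centerX : this.homeX;
    const targetY = focused ? centerY : this.homeY;
    this.x = lerp(this.x, targetX, easing);
    this.y = lerp(this.y, targetY, easing);
  }
}

const fly = new Firefly(50, 80);
for (let i = 0; i < 200; i++) fly.update(true, 400, 300);  // gathers at center
for (let i = 0; i < 200; i++) fly.update(false, 400, 300); // drifts back home
console.log(Math.round(fly.x), Math.round(fly.y));
```

Keeping the home coordinates apart from this.x and this.y means the return trip does not depend on wherever the firefly currently is.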

To control when fireflies come on and off the screen, I used mouseClicked() as the trigger to switch between states. Inside the mouseClicked() function, a boolean state is toggled. Eventually, the boolean state would be controlled by a user opening or closing the jar, communicated via Bluetooth. When a user opens the jar, fireflies appear onscreen, and when the user closes the jar, the fireflies disappear offscreen.
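The boolean switch described above might look like the following sketch. The variable name `jarOpen`, the handler `onLidValue`, and the convention that the lid switch reports 1 when the lid is on and 0 when it is removed are all assumptions; the actual Bluetooth message format is not given in this write-up.

```javascript
// Whether fireflies should be onscreen. Starts false: jar closed, nothing shown.
let jarOpen = false;

// Prototype trigger: p5.js calls mouseClicked() on every click,
// so flipping the flag here stands in for opening or closing the jar.
function mouseClicked() {
  jarOpen = !jarOpen;
}

// Hypothetical replacement for the final build: called with the lid switch
// value received over Bluetooth. Assumes 1 = lid on, 0 = lid removed.
function onLidValue(value) {
  jarOpen = value === 0;
}

// The draw loop would check this flag to decide whether to show fireflies.
function firefliesVisible() {
  return jarOpen;
}
```

Routing both triggers through one flag means the drawing code does not change when the mouse is swapped for the jar.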

FIREFLY VISUALIZATION UNCLEAR

It wasn’t clear the floating objects were fireflies.